Natural Language Processing (NLP) in Data Science and AI

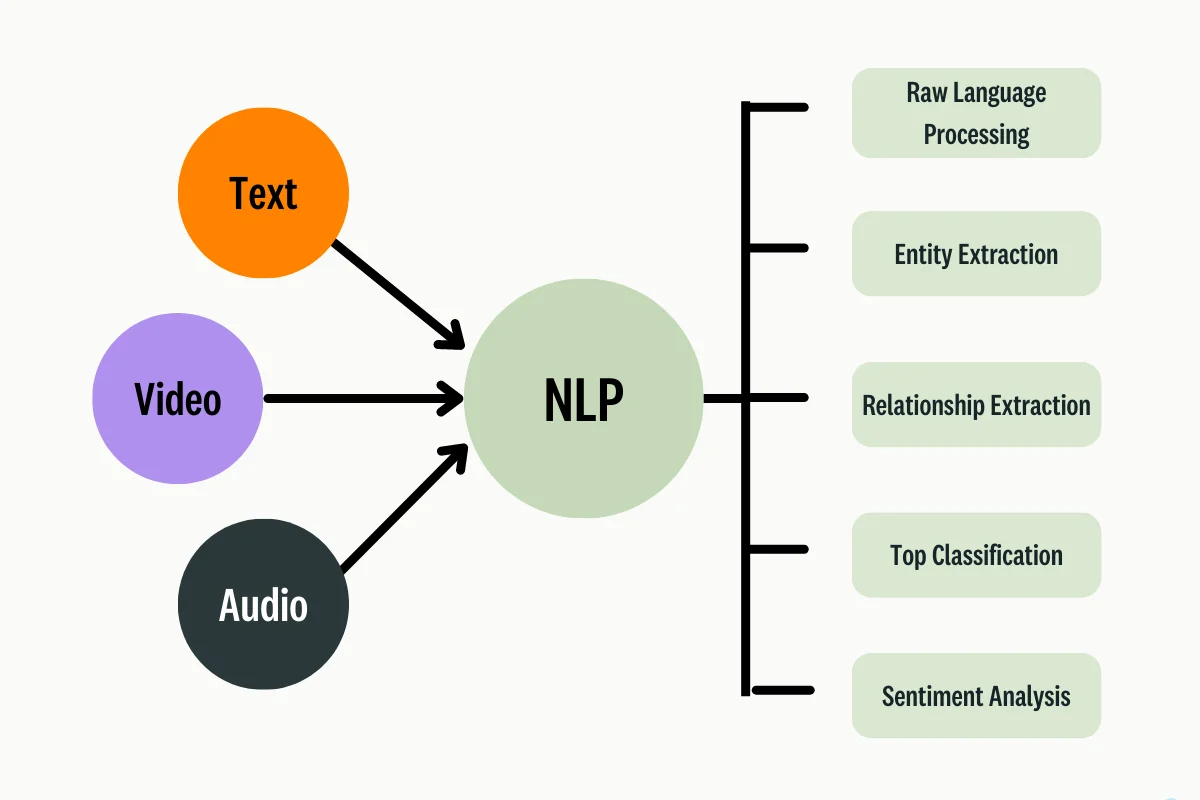

NLP bridges the gap between human language and machines, enabling computers to read, understand, and generate text and speech.

It powers applications such as chatbots, virtual assistants, sentiment analysis, and machine translation.

NLP is critical for extracting insights from unstructured text, such as emails, reviews, and reports, which makes up a large share of the data organizations collect.

In business and data science, NLP is used for automated document processing, feedback analysis, and trend detection.

Modern AI models such as GPT, BERT, and T5 are language models built on the Transformer architecture.

NLP enables voice-based interfaces and accessibility tools, expanding how users interact with software and services.

NLP drives innovation in healthcare (clinical notes), finance (report summarization), and law (contract analysis).

NLP supports search engines, recommendation systems, and even fraud detection through pattern recognition.

It is key to multilingual AI, helping people and systems communicate across languages.

Understanding NLP equips learners with the skills to build intelligent language-aware applications in today’s AI ecosystem.

Module 1: Introduction to NLP

What is NLP? Definition and goals

Applications of NLP in real-world systems

Challenges in NLP (ambiguity, context, domain)

Structured vs unstructured data

Text classification vs sequence-to-sequence tasks

Module 2: Text Preprocessing

Text normalization:

Lowercasing, punctuation removal

Stop word removal

Tokenization (word-level, subword-level, sentence-level)

Stemming vs Lemmatization

Removing noise and special characters

Spelling correction and slang handling
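The normalization, tokenization, stop-word, and stemming steps listed above can be chained into a small pipeline. This is a minimal sketch in plain Python, assuming a tiny hand-picked stop-word list (`STOP_WORDS`) and a toy suffix-stripping stemmer (`naive_stem`); both are hypothetical illustrations, not the Porter stemmer or any library's behavior. Real projects would typically use NLTK or spaCy for these steps.

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"a", "an", "the", "is", "are", "and", "or", "of", "to", "in"}

# Hypothetical suffix list for a toy stemmer (not the Porter algorithm).
SUFFIXES = ("ing", "ed", "es", "s")


def normalize(text: str) -> str:
    """Lowercase, replace anything that is not an ASCII letter, digit, or whitespace with a space."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    # Collapse runs of whitespace into single spaces.
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list[str]:
    """Word-level tokenization: split the normalized text on whitespace."""
    return normalize(text).split()


def naive_stem(token: str) -> str:
    """Strip the first matching suffix, keeping at least three characters of the stem."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Normalize, tokenize, drop stop words, then stem each remaining token."""
    return [naive_stem(t) for t in tokenize(text) if t not in STOP_WORDS]


print(preprocess("The cats are PLAYING in the garden!"))
```

The toy stemmer also shows why lemmatization exists: `naive_stem("running")` returns `"runn"`, a non-word, whereas a lemmatizer would map it to `"run"` using vocabulary and part-of-speech information.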

Module 3: Text Representation Techniques

Bag of Words (BoW)

Term Frequency-Inverse Document Frequency (TF-IDF)

Word embeddings:

Word2Vec (CBOW & Skip-gram)

GloVe

FastText

Document embeddings and sentence vectors
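Bag of Words and TF-IDF can both be computed from scratch in a few lines. This sketch assumes one common textbook variant, tf = count / document length and idf = log(N / df); libraries differ (scikit-learn's `TfidfVectorizer`, for example, defaults to a smoothed idf of ln((1 + N) / (1 + df)) + 1 and L2-normalizes each row), so the exact numbers will not match library output.

```python
import math
from collections import Counter


def bag_of_words(docs: list[list[str]]) -> list[Counter]:
    """Bag of Words: map each tokenized document to its term counts."""
    return [Counter(doc) for doc in docs]


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """TF-IDF with tf = count / doc length and idf = log(N / df); assumes non-empty documents."""
    n_docs = len(docs)
    counts = bag_of_words(docs)
    # Document frequency: the number of documents each term appears in.
    df = Counter()
    for c in counts:
        df.update(c.keys())
    weights = []
    for doc, c in zip(docs, counts):
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in c.items()
        })
    return weights


docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "sat"],
    ["the", "cat", "ran"],
]
for w in tf_idf(docs):
    print({t: round(v, 3) for t, v in w.items()})
```

Here "the" gets a weight of 0 in every document because it appears in all three, while "dog" receives the highest weight in the second document because no other document contains it.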

Module 4: Syntax and Parsing

Part-of-Speech (POS) tagging

Named Entity Recognition (NER)

Dependency parsing

Constituency parsing

Chunking and shallow parsing
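Chunking groups part-of-speech-tagged tokens into flat phrases without building a full parse tree. The sketch below assumes the input is already tagged with Penn Treebank tags (the tags in the test are hand-written, not produced by a tagger) and matches one hypothetical noun-phrase pattern: an optional determiner, any number of adjectives, then one or more nouns. It roughly corresponds to the NLTK `RegexpParser` grammar `NP: {<DT>?<JJ>*<NN.*>+}`, but is a standalone implementation.

```python
def chunk_noun_phrases(tagged: list[tuple[str, str]]) -> list[list[str]]:
    """Greedy left-to-right NP chunker for the pattern DT? JJ* NN+ (Penn Treebank tags)."""
    chunks = []
    i = 0
    while i < len(tagged):
        j = i
        # Optional determiner.
        if j < len(tagged) and tagged[j][1] == "DT":
            j += 1
        # Zero or more adjectives.
        while j < len(tagged) and tagged[j][1] == "JJ":
            j += 1
        # One or more nouns (NN, NNS, NNP, NNPS all start with "NN").
        k = j
        while k < len(tagged) and tagged[k][1].startswith("NN"):
            k += 1
        if k > j:
            # At least one noun matched: emit the chunk and continue after it.
            chunks.append([word for word, _ in tagged[i:k]])
            i = k
        else:
            # No noun phrase starts here; advance by one token.
            i += 1
    return chunks
```

For "The quick brown fox jumped over the lazy dog" tagged `DT JJ JJ NN VBD IN DT JJ NN`, this yields the chunks "The quick brown fox" and "the lazy dog", leaving the verb and preposition outside any chunk.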

Module 5: Language Modeling

What is a language model?

N-gram models and their limitations

Perplexity and smoothing

Neural language models:

RNN, LSTM, GRU

Transformer basics
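N-gram models, smoothing, and perplexity fit together in a small bigram model. This sketch uses add-one (Laplace) smoothing, P(w | v) = (C(v, w) + 1) / (C(v) + V), and perplexity as the exponential of the average negative log-probability per predicted token. The `<s>`/`</s>` boundary markers and the class name `BigramLM` are assumptions for illustration, and the model has no `<unk>` handling, so it assumes evaluation sentences use only training vocabulary.

```python
import math
from collections import Counter


class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences: list[list[str]]):
        self.bigrams = Counter()
        self.history = Counter()  # how often each token appears as the first word of a bigram
        vocab = {"</s>"}
        for sent in sentences:
            tokens = ["<s>"] + sent + ["</s>"]
            vocab.update(sent)
            for prev, word in zip(tokens, tokens[1:]):
                self.bigrams[(prev, word)] += 1
                self.history[prev] += 1
        # Possible next tokens: every training word plus the end marker.
        self.vocab_size = len(vocab)

    def prob(self, prev: str, word: str) -> float:
        """P(word | prev) = (C(prev, word) + 1) / (C(prev) + V)."""
        return (self.bigrams[(prev, word)] + 1) / (self.history[prev] + self.vocab_size)

    def perplexity(self, sent: list[str]) -> float:
        """exp of the average negative log-probability per predicted token (no <unk> handling)."""
        tokens = ["<s>"] + sent + ["</s>"]
        log_prob = sum(math.log(self.prob(p, w)) for p, w in zip(tokens, tokens[1:]))
        return math.exp(-log_prob / (len(tokens) - 1))


lm = BigramLM([["i", "like", "nlp"], ["i", "like", "python"]])
print(lm.perplexity(["i", "like", "nlp"]))
print(lm.perplexity(["nlp", "like", "i"]))
```

The training-like sentence gets a perplexity of roughly 2.75, while its scrambled version scores roughly 6.7; smoothing is what keeps the unseen bigrams in the scrambled sentence from having zero probability and an infinite perplexity.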

Module 6: Sentiment Analysis & Text Classification

Binary and multi-class sentiment classification

Rule-based vs ML-based approaches

Logistic regression, Naive Bayes, SVM

Deep learning for classification (CNN, RNN)

Evaluation metrics: accuracy, precision, recall, F1 score
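The evaluation metrics in this module can be computed directly from a confusion count. This is a minimal sketch for a binary sentiment task; the function name `classification_metrics` and the labels (1 = positive review, 0 = negative) are hypothetical. For multi-class sentiment, one common approach is to compute these per class and average them (macro-averaging).

```python
def classification_metrics(y_true: list[int], y_pred: list[int], positive: int = 1) -> dict[str, float]:
    """Accuracy, plus precision, recall, and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 0]  # 1 = positive review, 0 = negative review
y_pred = [1, 0, 0, 1, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

On this imbalanced sample, accuracy is 0.625, but recall is only 1/3 and F1 is 0.4, which exposes that the classifier misses most positive reviews. This is why F1, precision, and recall are reported alongside accuracy.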

Module 7: Sequence Modeling

Sequence labeling: NER, POS tagging

Sequence-to-sequence tasks: translation, summarization

RNNs and LSTMs in sequence modeling

Encoder-decoder architecture
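Sequence labeling assigns one tag per token. For NER, a widely used convention (assumed here; the outline does not name a scheme) is BIO tagging: `B-TYPE` begins an entity, `I-TYPE` continues it, and `O` marks tokens outside any entity. The hypothetical helper `bio_to_spans` below turns a tagged sequence back into entity spans, treating a stray `I-` tag as the start of a new entity.

```python
def bio_to_spans(tokens: list[str], tags: list[str]) -> list[tuple[str, str]]:
    """Collect (entity_type, text) spans from BIO tags."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # Close any open span, then start a new span of this type.
            if current_type:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-"):
            # Continue the open span of the same type.
            current_tokens.append(token)
        else:
            # "O" (outside): close any open span.
            if current_type:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    # Close a span that runs to the end of the sequence.
    if current_type:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = ["Ada", "Lovelace", "lived", "in", "London"]
tags = ["B-PER", "I-PER", "O", "O", "B-LOC"]
print(bio_to_spans(tokens, tags))
```

A sequence model (for example an LSTM tagger) predicts the tag column; a conversion step like this one is what turns its per-token output into entities that can be evaluated or stored.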

Module 8: Machine Translation

Rule-based and Statistical Machine Translation (SMT)

Neural Machine Translation (NMT)

BLEU score and other evaluation metrics

Transformer-based translation models
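BLEU combines clipped n-gram precisions (typically up to 4-grams, averaged geometrically) with a brevity penalty for translations shorter than the reference. The sketch below is a simplified sentence-level version against a single reference with no smoothing; it is an illustration only, and reported results should come from a standard implementation such as sacreBLEU, which computes BLEU at corpus level.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: list[str], reference: list[str], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU against one reference, with uniform n-gram weights."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        # Clipped matches: each candidate n-gram counts at most as often as it occurs in the reference.
        matches = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        total = sum(cand_counts.values())
        if matches == 0:
            return 0.0  # no smoothing: any zero precision makes BLEU zero
        log_precision_sum += math.log(matches / total)
    # Brevity penalty for candidates that are not longer than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_precision_sum / max_n)


reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat".split()
print(sentence_bleu(candidate, reference))           # BLEU-4
print(sentence_bleu(candidate, reference, max_n=2))  # BLEU-2
```

A single substituted word leaves no matching 4-gram, so unsmoothed BLEU-4 drops to 0.0 even though BLEU-2 is about 0.71. This sensitivity is why sentence-level BLEU is usually smoothed and why BLEU is normally reported over a whole test corpus.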

Module 9: Topic Modeling

Latent Semantic Analysis (LSA)

Latent Dirichlet Allocation (LDA)

Non-negative Matrix Factorization (NMF)

Visualizing and interpreting topics

Use in document clustering and trend analysis

Module 10: Question Answering & Chatbots

Types of QA systems: extractive vs generative

QA datasets (SQuAD, HotpotQA)

Contextual understanding using BERT

Chatbot architecture:

Rule-based

Retrieval-based

Generative (transformer-based)
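A retrieval-based chatbot answers by finding the stored question most similar to the user's message and returning its paired answer. This minimal sketch uses cosine similarity over bag-of-words counts; the FAQ pairs, the 0.2 similarity threshold, and the function names are hypothetical, and production systems usually use TF-IDF or sentence embeddings instead of raw counts.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words counts over lowercase alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical FAQ pairs standing in for a real support knowledge base.
FAQ = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
    ("What are your opening hours?", "Support is available 9am to 5pm, Monday to Friday."),
    ("How can I cancel my subscription?", "Go to Settings > Billing and choose Cancel."),
]


def respond(query: str, threshold: float = 0.2) -> str:
    """Return the answer whose stored question is most similar to the query."""
    q = vectorize(query)
    best_score, best_answer = max((cosine(q, vectorize(question)), answer) for question, answer in FAQ)
    # Fall back when nothing in the knowledge base is similar enough (threshold is hypothetical).
    return best_answer if best_score >= threshold else "Sorry, I don't know that one yet."


print(respond("I forgot my password, how do I reset it?"))
print(respond("Tell me a joke"))
```

Unlike a generative chatbot, this design can only return answers that already exist in the knowledge base, which makes its output predictable but limits coverage; the fallback reply handles queries with no close match.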

Module 11: Transformer Models in NLP

Transformer architecture deep dive

Pre-trained models:

BERT, RoBERTa, DistilBERT

GPT, T5, XLNet, ALBERT

Fine-tuning vs feature-based approaches

Hugging Face Transformers library
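The core operation inside the Transformer architecture is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, where each query is compared with every key and the resulting weights mix the value vectors. The sketch below computes it for a single head on tiny hand-written matrices using plain Python lists; it omits masking, multiple heads, and the learned projections that produce Q, K, and V, all of which real models implement with tensor libraries.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax (subtracts the maximum before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    q: list[list[float]], k: list[list[float]], v: list[list[float]]
) -> list[list[float]]:
    """softmax(Q K^T / sqrt(d_k)) V for one head, without masking."""
    d_k = len(k[0])
    outputs = []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d_k) for key in k]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output column at a time.
        outputs.append([sum(w * row[j] for w, row in zip(weights, v)) for j in range(len(v[0]))])
    return outputs


q = [[10.0, 0.0]]
k = [[10.0, 0.0], [0.0, 10.0]]
v = [[1.0, 0.0], [0.0, 1.0]]
print(scaled_dot_product_attention(q, k, v))
```

Because the query aligns strongly with the first key, almost all of the attention weight goes to the first value vector and the output is very close to `[1.0, 0.0]`. Dividing by sqrt(d_k) keeps dot products from growing with the vector dimension, which would otherwise push the softmax toward near one-hot weights.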

Module 12: Information Extraction & Text Mining

Named Entity Recognition (NER) revisited

Relation extraction

Event and fact extraction

Text summarization:

Extractive vs abstractive

Keyword extraction (RAKE, TextRank)
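RAKE (Rapid Automatic Keyword Extraction) splits text into candidate phrases at stop words and punctuation, scores each word as degree / frequency (degree being the total length of the candidate phrases the word appears in), and scores a phrase as the sum of its word scores. This is a minimal sketch of that idea, assuming a small hypothetical stop-word list; RAKE is normally run with a much larger stop list, and the function name `rake_keywords` is illustrative.

```python
import re
from collections import Counter, defaultdict

# Hypothetical, minimal stop-word list; real RAKE setups use a much larger one.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "with"}


def rake_keywords(text: str, top_k: int = 3) -> list[tuple[str, float]]:
    """Rank candidate phrases by the sum of their words' degree / frequency scores."""
    phrases = []
    # Split into fragments at punctuation, then into candidate phrases at stop words.
    for fragment in re.split(r"[.,;:!?()\n]", text.lower()):
        current = []
        for word in re.findall(r"[a-z0-9]+", fragment):
            if word in STOP_WORDS:
                if current:
                    phrases.append(current)
                current = []
            else:
                current.append(word)
        if current:
            phrases.append(current)

    freq = Counter()
    degree = defaultdict(int)
    for phrase in phrases:
        for word in phrase:
            freq[word] += 1
            # Degree: co-occurrences within candidate phrases, counting the word itself.
            degree[word] += len(phrase)

    word_score = {w: degree[w] / freq[w] for w in freq}
    scored = {" ".join(p): sum(word_score[w] for w in p) for p in phrases}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)[:top_k]


text = ("Sentiment analysis of customer reviews is a common task. "
        "Machine translation and sentiment analysis are core NLP applications.")
print(rake_keywords(text))
```

"sentiment analysis" occurs twice yet scores no higher than "customer reviews", while the three-word "core nlp applications" ranks first: RAKE's degree / frequency ratio rewards words that occur in longer phrases rather than words that are merely frequent.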

Module 13: Multilingual NLP

Cross-lingual embeddings

Multilingual BERT (mBERT)

Translation tools and datasets

Transfer learning across languages

Low-resource language modeling

Module 14: Evaluation and Ethics in NLP

Model evaluation metrics for NLP tasks

Hallucination and factual consistency

Bias and fairness in language models

Toxicity detection and content moderation

Ethical data sourcing and annotation

Module 15: Tools, Frameworks, and Libraries

NLTK and spaCy

Gensim for topic modeling

Hugging Face Transformers

OpenAI and Cohere APIs

LangChain for LLM-powered NLP

Module 16: Projects & Case Studies

Sentiment analysis on real-world reviews

Resume/job description matching engine

Customer support chatbot using retrieval-augmented generation (RAG)

Text summarizer using BERT

Multilingual Q&A system