Large Language Models (LLMs) in Data Science and AI

LLMs power today’s most advanced AI systems, including chatbots, copilots, search engines, and creative tools.

They understand and generate human-like text, enabling applications in customer support, content creation, summarization, and more.

LLMs such as GPT, LLaMA, Claude, and Gemini can perform zero-shot and few-shot learning, so they can take on new tasks from instructions or a handful of examples without retraining.

They support natural language interfaces to databases, APIs, and applications—transforming how users interact with software.

LLMs are increasingly used to accelerate and automate data preprocessing and analysis across data science workflows.

They are foundational to generative AI, used in text-to-image, text-to-code, and multimodal applications.

LLMs can be fine-tuned or prompted to specialize in domain-specific tasks such as legal advice, education, or programming.

Their underlying architecture, the Transformer, is now the standard in NLP and is also widely used in computer vision and audio processing.

Open-source LLMs are fueling innovation in enterprise and research, making cutting-edge AI accessible and modifiable.

Mastering LLMs empowers learners to build, evaluate, and deploy modern AI applications responsibly and efficiently.

Module 1: Introduction to LLMs

What are Large Language Models?

LLMs vs traditional NLP pipelines

Real-world applications of LLMs

Evolution: from BERT and GPT to LLaMA, GPT-4, Claude, and Gemini

Generative AI and LLMs

Module 2: NLP Foundations for LLMs

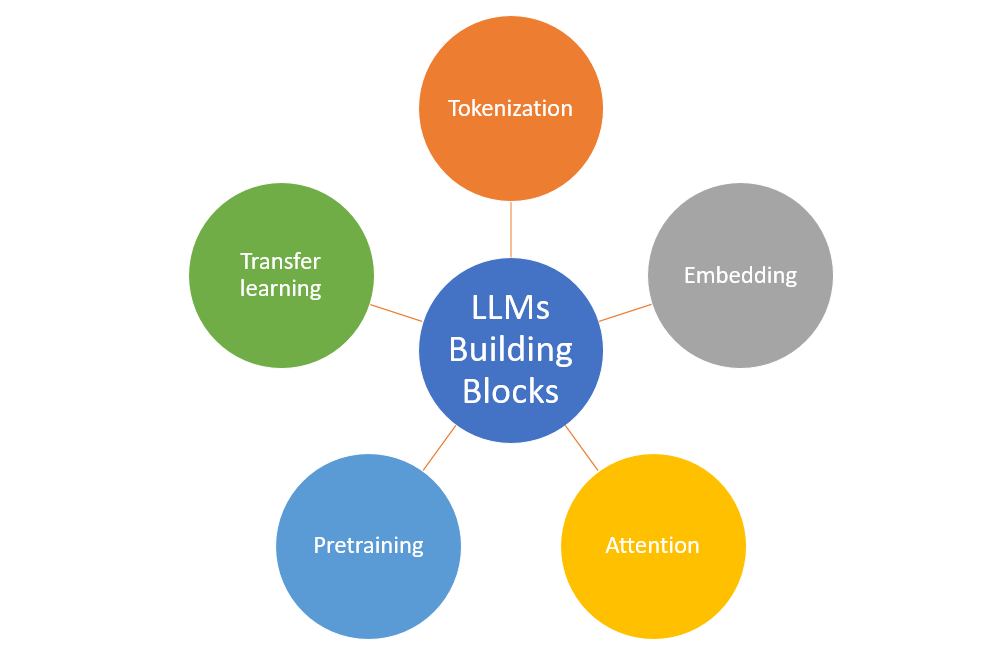

Text preprocessing and tokenization

Embeddings and vector space models

Language modeling: N-grams vs neural models

Attention mechanism: motivation and benefits
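
To make the N-gram side of "N-grams vs neural models" concrete, here is a minimal sketch of a bigram language model. It is an illustration only: the toy corpus and the function names `train_bigram` and `next_token_prob` are hypothetical, and it uses plain maximum-likelihood counts with no smoothing, so any unseen context gets probability 0.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each previous token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_prob(counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 for unseen contexts."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
print(next_token_prob(model, "the", "cat"))  # "the" is followed by "cat" 2 of 3 times
print(model["the"].most_common(1))           # most likely continuation of "the"
```

A neural language model replaces these raw counts with learned embeddings and a network, which lets it generalize to contexts it has never seen verbatim.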

Module 3: Transformer Architecture

Self-attention and multi-head attention

Encoder-decoder vs decoder-only models

Positional encoding

Layer normalization and residual connections

Pre-training and fine-tuning process
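
Self-attention is built on scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. The sketch below implements a single head in plain Python, with an optional causal mask of the kind decoder-only models use. As a simplification it feeds the same matrix in as queries, keys, and values; real layers first apply learned projections (W_Q, W_K, W_V), and multi-head attention runs several such heads in parallel on different projections and concatenates their outputs.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax; -inf entries receive weight 0."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # K transposed
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    if causal:
        # Decoder-only models hide future positions: token i attends to tokens 0..i only.
        scores = [[s if j <= i else -math.inf for j, s in enumerate(row)]
                  for i, row in enumerate(scores)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Simplified self-attention: queries, keys, and values all come from the same sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x, causal=True)
for row in w:
    print([round(p, 3) for p in row])
```

With the causal mask, the first token can only attend to itself, so its output equals its own value vector.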

Module 4: Types of LLMs

Autoregressive Models: GPT, Mistral, LLaMA

Masked Language Models: BERT, RoBERTa

Instruction-Tuned Models: ChatGPT, Claude

Multimodal Models: Gemini, GPT-4o

Open-Source Models: Falcon, Mistral, LLaMA-3
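
The difference between autoregressive and masked models shows up in how training examples are built. This sketch (hypothetical helper names, whitespace tokens) contrasts the two. Note that the masked version is simplified: BERT's actual recipe selects about 15% of tokens and then replaces most, but not all, of them with `[MASK]`, while here every selected token is masked.

```python
import random

def causal_lm_pairs(tokens):
    """Autoregressive (GPT-style): predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_lm_example(tokens, mask_prob=0.15, seed=0, mask_token="[MASK]"):
    """Masked (BERT-style, simplified): hide random tokens, predict them from both sides."""
    rng = random.Random(seed)
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(mask_token)
            targets[i] = tok  # position -> original token the model must recover
        else:
            inputs.append(tok)
    return inputs, targets

tokens = "the cat sat on the mat".split()
for context, target in causal_lm_pairs(tokens):
    print(context, "->", target)
print(masked_lm_example(tokens, mask_prob=0.3))
```

The causal model only ever sees left context, which is what makes it natural for text generation; the masked model sees context on both sides, which suits understanding tasks such as classification.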

Module 5: Tokenization and Embedding

Byte Pair Encoding (BPE), SentencePiece

Vocabulary size and trade-offs

Embedding layers in LLMs

Role of context length
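
Byte Pair Encoding learns a vocabulary by repeatedly merging the most frequent adjacent pair of symbols. The toy sketch below follows that idea on a small word-frequency table (the classic low/lower/newest/widest example). Function names are illustrative, ties are broken by first occurrence, and production tokenizers (for example byte-level BPE or SentencePiece) add many details omitted here.

```python
from collections import Counter

def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for word, freq in vocab.items():
        for a, b in zip(word, word[1:]):
            pairs[(a, b)] += freq
    return pairs

def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for word, freq in vocab.items():
        out, i = [], 0
        while i < len(word):
            if i < len(word) - 1 and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        merged[tuple(out)] = freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules, most frequent pair first."""
    # Start from single characters; "</w>" marks the end of a word.
    vocab = {tuple(w) + ("</w>",): f for w, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab

merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=3)
print(merges)
print(list(vocab))
```

Each merge adds one symbol to the vocabulary, which is the trade-off this module discusses: more merges give shorter token sequences but a larger embedding table.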

Module 6: Training and Scaling Laws

Pre-training objectives (causal, masked, contrastive)

Datasets used for pre-training (Common Crawl, Wikipedia, etc.)

Scaling laws for compute, data, and model size

Training infrastructure and parallelism
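
Two widely cited rules of thumb from the scaling-law literature make compute budgets easy to estimate: training compute is roughly C ≈ 6ND FLOPs for N parameters and D training tokens, and the Chinchilla results are often summarized as about 20 training tokens per parameter for compute-optimal training. The sketch below encodes both approximations; the 7B-parameter model size is a hypothetical example, and real budgets depend on architecture and implementation.

```python
def training_flops(n_params, n_tokens):
    """Approximate training compute, C ~ 6 * N * D (forward plus backward pass)."""
    return 6 * n_params * n_tokens

def compute_optimal_tokens(n_params, tokens_per_param=20):
    """Chinchilla-style heuristic: roughly 20 training tokens per parameter."""
    return tokens_per_param * n_params

n = 7e9  # hypothetical 7B-parameter model
d = compute_optimal_tokens(n)
c = training_flops(n, d)
print(f"{d:.2e} tokens, {c:.2e} FLOPs")
```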

Module 7: Prompt Engineering

What is prompting?

Zero-shot, one-shot, and few-shot examples

Chain-of-thought (CoT) prompting

Role prompting, system prompts

Prompt injection and security risks

Tools: LangChain, Guidance, PromptFlow
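
Zero-, one-, and few-shot prompting differ only in how many worked examples precede the new input. This sketch assembles such a prompt as a plain string; the `Input:`/`Output:` layout and the sentiment task are assumptions chosen for illustration, not a format any particular model requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Instruction, then k worked examples, then the new input left open for the model.

    examples=[] gives a zero-shot prompt; one example gives a one-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)

prompt = build_few_shot_prompt(
    "Classify the sentiment as positive or negative.",
    [("I loved it", "positive"), ("Waste of money", "negative")],
    "Great battery life",
)
print(prompt)
```

Because the prompt ends with an open `Output:`, the model's most natural continuation is a label in the same style as the examples.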

Module 8: Fine-Tuning and Adaptation

Full fine-tuning vs parameter-efficient fine-tuning (PEFT) methods such as LoRA and QLoRA

Instruction tuning and supervised fine-tuning (SFT)

Reinforcement Learning from Human Feedback (RLHF)

Dataset design for custom LLMs

Evaluation and safety tuning
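
LoRA keeps a pretrained weight matrix W frozen and learns a low-rank update, so a layer computes h = Wx + (alpha / r) BAx. The plain-Python sketch below shows only the forward pass and the parameter count (no training loop); the class name and initialization scale are illustrative assumptions, while libraries such as Hugging Face PEFT apply the same idea to real models.

```python
import random

def matvec(m, x):
    """Matrix-vector product with matrices stored as lists of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update: h = W x + (alpha / r) * B A x."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pretrained W (d_out x d_in), kept frozen
        # A (r x d_in) gets small random values; B (d_out x r) starts at zero,
        # so the adapted layer initially behaves exactly like the base layer.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B would be updated during fine-tuning."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])

layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: B is zero, so the output equals W x
print(layer.trainable_params())   # 4
```

The savings grow with layer size: for a 4096 x 4096 weight, full fine-tuning updates 16,777,216 values, while rank-8 LoRA trains 8 x (4096 + 4096) = 65,536.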

Module 9: Inference and Deployment

GPU/TPU requirements and inference strategies

Quantization and model compression (8-bit, 4-bit)

Using LLMs via API (OpenAI, Hugging Face, Cohere)

Local deployment of open-source models

Streaming and low-latency response tuning
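
The simplest form of 8-bit or 4-bit quantization is symmetric "absmax" scaling: divide by the largest absolute value, map onto the signed integer range, and round. The sketch below applies one scale to a whole list of weights. Real inference libraries typically use per-channel or per-block scales and specialized formats (for example the 4-bit NormalFloat type introduced with QLoRA), so treat this as an illustration of the principle only.

```python
def quantize_absmax(values, bits=8):
    """Symmetric (absmax) quantization of a list of floats to signed integers."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    peak = max(abs(v) for v in values)
    if peak == 0:
        return [0] * len(values), 1.0
    scale = peak / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.0, 1.0]
q8, s8 = quantize_absmax(weights, bits=8)
q4, s4 = quantize_absmax(weights, bits=4)
print(q8, dequantize(q8, s8))
print(q4, dequantize(q4, s4))
```

Each value's rounding error is at most half a quantization step, so the 4-bit version, with only 15 levels, loses noticeably more precision than the 8-bit one.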

Module 10: Evaluation of LLMs

Accuracy, coherence, hallucination rate

BLEU, ROUGE, perplexity, BERTScore

Human evaluation methods

Benchmarks: HELM, MMLU, TruthfulQA
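
Perplexity is the exponential of the average negative log-probability a model assigns to the actual next tokens, so lower is better. The sketch below assumes you already have those per-token probabilities (for example from a model's output distribution); the input values are made up for illustration.

```python
import math

def perplexity(token_probs):
    """exp of the mean negative log-probability assigned to each actual next token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

print(perplexity([0.25, 0.25, 0.25, 0.25]))  # ~4.0: like guessing uniformly among 4 tokens
print(perplexity([0.9, 0.8, 0.95]))          # a more confident model scores lower
```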

Module 11: Building Applications with LLMs

Text summarization, rewriting, translation

Code generation and explanation (Code LLMs)

Semantic search with vector databases (FAISS, Chroma)

Chatbots and virtual assistants

Agent frameworks: LangChain Agents, AutoGPT, CrewAI
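
Semantic search ranks documents by the similarity between their embedding vectors and the query's embedding. Vector databases such as FAISS and Chroma make this fast at scale, but the core operation can be sketched as a brute-force cosine-similarity search. The three-dimensional vectors below are made-up stand-ins for real embedding-model outputs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def top_k(query_vec, index, k=2):
    """Brute-force search: score every stored document and return the k best."""
    scored = [(cosine(query_vec, vec), doc) for doc, vec in index.items()]
    return sorted(scored, reverse=True)[:k]

# Toy "embeddings"; a real system would obtain these from an embedding model.
index = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "how to return an item": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # pretend embedding of "Can I get my money back?"
for score, doc in top_k(query, index):
    print(f"{score:.3f}  {doc}")
```

Because matching happens in embedding space, the query retrieves "refund policy" and "how to return an item" even though it shares no keywords with either.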

Module 12: Tools & Ecosystem

Hugging Face Transformers and Datasets

OpenAI Assistants API

LangChain, LlamaIndex, Vector DBs

Gradio, Streamlit, FastAPI

MLflow and Weights & Biases for tracking

Module 13: Multimodal and Vision-Language LLMs

Introduction to multimodal transformers

Image captioning (BLIP, Flamingo)

Text-to-image (DALL·E, Stable Diffusion)

Vision-Language models (GPT-4o, Gemini)

Speech and audio with Whisper, Bark

Module 14: Ethical, Legal, and Social Implications

Bias and fairness in LLMs

Hallucinations and misinformation

Copyright and licensing issues

Open-weight vs closed-weight models

Regulatory frameworks (EU AI Act, NIST AI Risk Management Framework)

Module 15: Capstone Projects and Case Studies

Build a custom Q&A chatbot using the OpenAI API

Deploy a local open-source LLM using Hugging Face

Fine-tune a model using PEFT on a domain-specific dataset

Integrate an LLM with search using Retrieval-Augmented Generation (RAG)

Build a generative agent with memory and context