DEEP LEARNING

in Data Science and AI

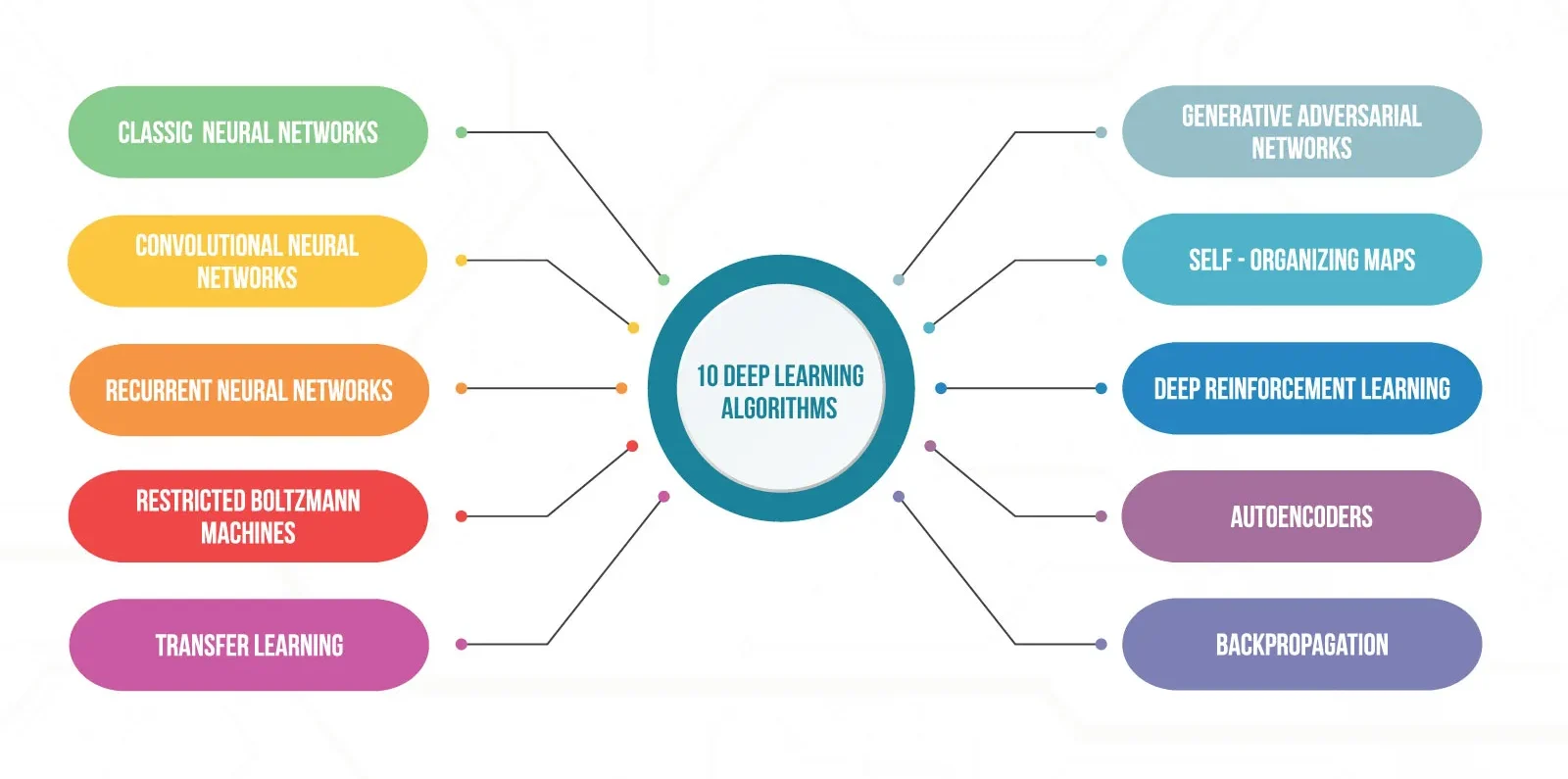

Deep Learning (DL) enables machines to learn complex patterns from large amounts of data, outperforming traditional ML in many areas.

It powers state-of-the-art AI systems in computer vision, speech recognition, NLP, and generative AI.

DL models learn useful features directly from raw data, greatly reducing the need for manual feature engineering.

Architectures like CNNs specialize in images and video, while RNNs handle time series and other sequential data.

DL is at the core of modern breakthroughs like ChatGPT, AlphaGo, autonomous driving, and deepfake generation.

It enables transfer learning, where pre-trained models can be adapted to new tasks with far less data and compute than training from scratch.

Frameworks like TensorFlow and PyTorch make DL development accessible and scalable.

DL fuels innovation in healthcare, robotics, fintech, and education, pushing the frontiers of AI.

It supports the development of reinforcement learning agents in gaming, robotics, and simulations.

Deep Learning is essential for building end-to-end intelligent systems that can sense, understand, and act.

Module 1: Introduction to Deep Learning

What is Deep Learning?

Deep Learning vs Machine Learning

History and evolution of DL

Applications of DL in AI

DL frameworks: TensorFlow, PyTorch, Keras

Module 2: Math Foundations for Deep Learning

Vectors, matrices, and tensors

Linear transformations

Derivatives and gradients

Chain rule and backpropagation

Activation functions: Sigmoid, Tanh, ReLU, Leaky ReLU, Softmax
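As a rough illustration of the activation functions listed in this module, here is a minimal pure-Python sketch. The function names and the `alpha=0.01` default for Leaky ReLU are assumptions chosen for illustration; in practice the course frameworks (TensorFlow, PyTorch, Keras) provide their own vectorized versions.

```python
import math

def sigmoid(x):
    # Numerically stable form: avoids overflow in exp() for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x):
    # Squashes input to the range (-1, 1).
    return math.tanh(x)

def relu(x):
    # Passes positive values through, zeroes out negatives.
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    # Like ReLU, but keeps a small slope (alpha) for negative inputs.
    return x if x > 0 else alpha * x

def softmax(xs):
    # Subtracting the max before exponentiating keeps exp() from overflowing.
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]
```

Sigmoid, Tanh, ReLU, and Leaky ReLU act on one value at a time, while Softmax takes a whole vector of scores and returns probabilities that sum to 1.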

Module 3: Neural Networks Basics

Perceptron and multi-layer perceptrons (MLPs)

Forward propagation

Loss functions: MSE, Cross-Entropy

Backpropagation and weight updates

Optimization algorithms:

Gradient Descent

SGD, Adam, RMSprop
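To make the loop of forward propagation, loss, gradients, and weight updates concrete, here is a minimal sketch of plain gradient descent fitting a line with MSE loss. The toy data, the learning rate of 0.05, and the helper names `mse` and `gradient_step` are assumptions for illustration only; SGD, Adam, and RMSprop refine this same update rule.

```python
def mse(w, b, xs, ys):
    # Mean squared error of the linear model y_hat = w * x + b.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient_step(w, b, xs, ys, lr):
    # Gradients of the MSE loss with respect to w and b.
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Move each parameter a small step against its gradient.
    return w - lr * grad_w, b - lr * grad_b

# Toy data generated from y = 2x + 1 (assumed for this example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
for _ in range(2000):
    w, b = gradient_step(w, b, xs, ys, lr=0.05)
```

After training, `w` and `b` should be close to 2 and 1. Using the full dataset for every step is what distinguishes batch gradient descent from SGD, which estimates the gradient from a random subset.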

Module 4: Building Neural Networks with Keras/PyTorch

Model architecture using Sequential and functional API

Compiling and training a model

Evaluating performance

Saving and loading models

Custom training loops (PyTorch)

Module 5: Regularization and Optimization

Overfitting vs underfitting

Regularization techniques:

L1, L2

Dropout

Early stopping

Batch normalization

Learning rate scheduling

Module 6: Convolutional Neural Networks (CNNs)

Convolution operation and filters

Padding, stride, pooling

Building CNNs in Keras/PyTorch

Image classification with CNN

Transfer learning with pre-trained models (VGG, ResNet)
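For the padding, stride, and pooling topics in this module, the standard output-size relation can be sketched as a small helper. The function name `conv_output_size` is hypothetical; the formula, floor((n + 2p - k) / s) + 1, is the usual one for a square input of side n, kernel size k, stride s, and padding p, and it applies to pooling windows as well.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    # Spatial output size along one dimension of a convolution or pooling layer.
    return (n + 2 * padding - kernel) // stride + 1

# A 3x3 convolution with padding 1 preserves a 32x32 input.
same = conv_output_size(32, kernel=3, stride=1, padding=1)

# A 2x2 pooling window with stride 2 halves it.
pooled = conv_output_size(32, kernel=2, stride=2)

# A 5x5 convolution with no padding shrinks a 28x28 input.
valid = conv_output_size(28, kernel=5)
```

Checking layer sizes this way before building a CNN helps catch shape mismatches between the last pooling layer and the first dense layer.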

Module 7: Recurrent Neural Networks (RNNs)

Introduction to sequence modeling

RNN architecture and limitations

Long Short-Term Memory (LSTM)

Gated Recurrent Units (GRU)

Applications in time series, speech, and text

Module 8: Natural Language Processing with DL

Tokenization and word embeddings (Word2Vec, GloVe)

Embedding layers

Sentiment analysis with LSTM

Attention mechanism basics

Introduction to Transformers (BERT, GPT overview)

Module 9: Generative Models

Autoencoders:

Undercomplete and Denoising

Variational Autoencoders (VAEs)

Generative Adversarial Networks (GANs):

Generator vs Discriminator

Applications: Deepfakes, image generation

Module 10: Deployment and Inference

Converting models for deployment

TensorFlow Lite, ONNX, TorchScript

Inference optimization for edge devices

Model serving with Flask / FastAPI

Using DL models in web apps or mobile apps

Module 11: Tools and Libraries

TensorBoard for visualization

Weights & Biases or MLflow for tracking experiments

GPU acceleration with CUDA and cuDNN

Hugging Face Transformers library (intro)

Module 12: Advanced Topics

Attention and Transformers in detail

BERT, GPT, Vision Transformers

Reinforcement Learning introduction

Neural Architecture Search

Large Language Models (LLMs) overview

Module 13: Real-world Projects

Image classification on CIFAR-10 / MNIST

Sentiment classification with the IMDB or Yelp dataset

Object detection (YOLO or SSD intro)

Time series forecasting (stock prices, sensors)

Build a chatbot with deep learning