PyTorch in Data Science and AI

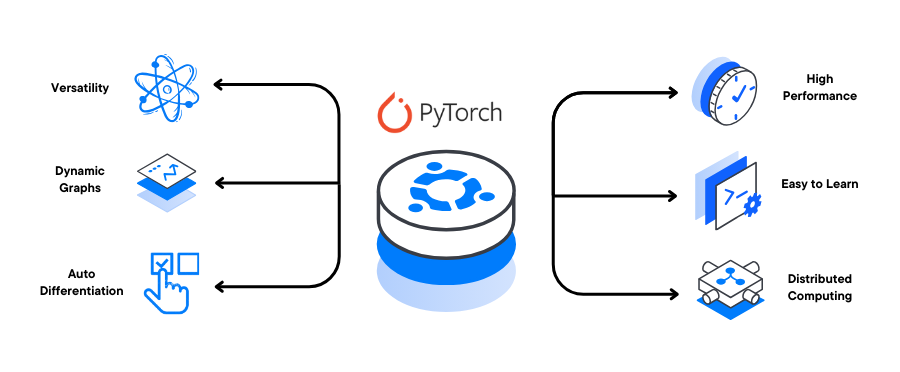

PyTorch is a leading deep learning framework known for its flexibility, ease of use, and dynamic computation graph.

It uses eager execution by default, so debugging and experimentation feel intuitive and Pythonic. PyTorch has become a de facto standard in academic research, especially for NLP and computer vision.

It powers production-ready systems with TorchScript, ONNX export, and mobile deployment capabilities.

PyTorch works smoothly with Python libraries such as NumPy, pandas, and scikit-learn, supporting end-to-end ML workflows.

It supports automatic differentiation and GPU acceleration, making model training efficient and scalable.

PyTorch provides modular APIs, including torch.nn, torch.optim, torchvision, and torchtext, for building a wide range of ML and DL systems. Widely used implementations of models such as GPT, BERT, ResNet, and YOLO are available in PyTorch. It is also used at AI companies including Meta, OpenAI, and Tesla.

PyTorch’s open-source ecosystem and active community provide state-of-the-art pretrained models and code that supports reproducible research.

Learning PyTorch gives students strong foundations in building, training, and deploying AI models from scratch.

Module 1: Introduction to PyTorch

What is PyTorch?

PyTorch vs TensorFlow

Installing PyTorch (CPU/GPU)

Overview of key libraries: torch, torch.nn, torchvision
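The snippet below is a quick way to confirm that an installation works. It assumes PyTorch was installed with a command such as pip install torch. The exact command for a particular CUDA version comes from the selector on pytorch.org.

```python
import torch

print(torch.__version__)           # installed PyTorch version string
gpu_ok = torch.cuda.is_available()
print(gpu_ok)                      # True only with a CUDA-enabled build and a visible GPU
x = torch.ones(2, 2)
print(x.device)                    # new tensors are created on the CPU by default
```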

Module 2: Tensors and Operations

Creating tensors: from lists, arrays, random values

Tensor properties: shape, dtype, device

Basic tensor operations: arithmetic, broadcasting

Indexing, slicing, reshaping

Converting between NumPy and Torch
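The tensor topics above fit in a few lines. This is a minimal sketch assuming a CPU-only setup, and the variable names are arbitrary.

```python
import numpy as np
import torch

# Creating tensors: from a list, from a NumPy array, and from random values
a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
b = torch.from_numpy(np.array([10.0, 20.0]))  # shares memory with the NumPy array
r = torch.rand(2, 3)                           # uniform samples in [0, 1)

# Tensor properties
print(a.shape, a.dtype, a.device)  # torch.Size([2, 2]) torch.float32 cpu

# Broadcasting: b (shape [2]) is added to each row of a (shape [2, 2])
c = a + b.to(a.dtype)              # [[11., 22.], [13., 24.]]

# Indexing, slicing, reshaping
first_row = a[0]                   # tensor([1., 2.])
second_col = a[:, 1]               # tensor([2., 4.])
flat = a.reshape(-1)               # tensor([1., 2., 3., 4.])

# Back to NumPy (works for CPU tensors that do not require grad)
arr = c.numpy()
```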

Module 3: Automatic Differentiation with Autograd

Introduction to torch.autograd

Computing gradients with .backward()

Computational graph explanation

Using requires_grad, detach()

Chain rule and gradient flow in networks
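One small example covers the autograd topics above. It uses the scalar function y = (w * x) ** 2, whose gradients are easy to check by hand with the chain rule. The values are illustrative only.

```python
import torch

# requires_grad=True tells autograd to record operations on these tensors
x = torch.tensor(3.0, requires_grad=True)
w = torch.tensor(2.0, requires_grad=True)

# The forward computation builds a graph: y = (w * x) ** 2 = 36
y = (w * x) ** 2

# backward() applies the chain rule through the recorded graph
y.backward()

# dy/dx = 2 * (w * x) * w = 24 and dy/dw = 2 * (w * x) * x = 36
print(x.grad, w.grad)  # tensor(24.) tensor(36.)

# detach() returns a tensor that shares the data but is cut out of the graph
z = y.detach()
print(z.requires_grad)  # False
```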

Module 4: Building Neural Networks

Using torch.nn.Module

Defining __init__ and forward() methods

Layers: Linear, ReLU, Sigmoid, Softmax

Model parameters and initialization

Saving and loading models
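Here is a minimal sketch of the module-building steps above: a subclass of nn.Module, explicit initialization, a parameter count, and saving and loading through the state_dict. The class TinyClassifier and its layer sizes are hypothetical, chosen only for illustration.

```python
import os
import tempfile

import torch
from torch import nn

class TinyClassifier(nn.Module):
    """Hypothetical two-layer network used only for illustration."""

    def __init__(self, in_features: int, hidden: int, num_classes: int):
        super().__init__()
        # Layers assigned as attributes are registered automatically as submodules
        self.fc1 = nn.Linear(in_features, hidden)
        self.act = nn.ReLU()
        self.fc2 = nn.Linear(hidden, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Returns raw scores (logits); softmax is applied only when probabilities are needed
        return self.fc2(self.act(self.fc1(x)))

model = TinyClassifier(in_features=4, hidden=8, num_classes=3)

# Optional explicit initialization (nn.Linear already has a default scheme)
nn.init.xavier_uniform_(model.fc1.weight)
nn.init.zeros_(model.fc1.bias)

num_params = sum(p.numel() for p in model.parameters())  # (4*8 + 8) + (8*3 + 3) = 67

logits = model(torch.randn(5, 4))
probs = torch.softmax(logits, dim=1)  # each row sums to 1

# Save and load the state_dict (parameters only, not the class definition)
path = os.path.join(tempfile.mkdtemp(), "tiny.pt")
torch.save(model.state_dict(), path)
restored = TinyClassifier(4, 8, 3)
restored.load_state_dict(torch.load(path))
```

Saving the state_dict rather than the whole model object is the pattern the PyTorch documentation recommends. The class definition must still be available when loading.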

Module 5: Training a Neural Network

The training loop:

Forward pass

Loss computation

Backward pass

Weight updates

Optimizers: SGD, Adam, RMSprop (torch.optim)

Loss functions: MSE, CrossEntropy, BCE

Evaluation metrics: accuracy, loss
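The four steps of the training loop map directly onto code. This is a minimal full-batch sketch on hypothetical toy data, where points are labeled by whether x0 + x1 > 0. The network size, learning rate, and epoch count are arbitrary choices.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy data: 2-D points, class 1 when x0 + x1 > 0
X = torch.randn(200, 2)
y = (X.sum(dim=1) > 0).long()

model = nn.Sequential(nn.Linear(2, 16), nn.ReLU(), nn.Linear(16, 2))
loss_fn = nn.CrossEntropyLoss()  # expects raw logits and integer class labels
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)

for epoch in range(200):
    logits = model(X)            # 1. forward pass
    loss = loss_fn(logits, y)    # 2. loss computation
    optimizer.zero_grad()        # clear gradients left over from the previous step
    loss.backward()              # 3. backward pass
    optimizer.step()             # 4. weight update

# Evaluation: accuracy on the training data, without tracking gradients
model.eval()
with torch.no_grad():
    preds = model(X).argmax(dim=1)
    accuracy = (preds == y).float().mean().item()
print(f"final loss {loss.item():.3f}, accuracy {accuracy:.2%}")
```

To swap optimizers, replace Adam with torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9) or torch.optim.RMSprop(model.parameters(), lr=0.01). Regression tasks would use nn.MSELoss. Binary tasks with a single output logit would use nn.BCEWithLogitsLoss.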

Module 6: Working with Datasets and DataLoaders

Using torch.utils.data.Dataset and DataLoader

Built-in datasets from torchvision and torchtext

Creating custom datasets

Transformations and augmentation (transforms)

Batching, shuffling, and prefetching
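The following is a minimal sketch of a custom Dataset wrapped in a DataLoader. SquaresDataset and add_noise are hypothetical names. The noise function is a simple stand-in for a torchvision transform.

```python
import torch
from torch.utils.data import DataLoader, Dataset

class SquaresDataset(Dataset):
    """Hypothetical custom dataset: sample i is (i, i**2)."""

    def __init__(self, n: int, transform=None):
        self.n = n
        self.transform = transform  # optional callable applied to each input

    def __len__(self) -> int:
        return self.n

    def __getitem__(self, idx: int):
        x = torch.tensor([float(idx)])
        y = torch.tensor([float(idx) ** 2])
        if self.transform is not None:
            x = self.transform(x)
        return x, y

def add_noise(x: torch.Tensor) -> torch.Tensor:
    """A basic augmentation: add small Gaussian noise to the input."""
    return x + 0.01 * torch.randn_like(x)

ds = SquaresDataset(10, transform=add_noise)

# batch_size groups samples, and shuffle=True reorders them every epoch.
# Setting num_workers > 0 would load batches in background worker processes,
# with prefetch_factor controlling how many batches each worker loads ahead.
loader = DataLoader(ds, batch_size=4, shuffle=True, num_workers=0)

batch_shapes = [(xb.shape, yb.shape) for xb, yb in loader]  # batches of 4, 4, 2
```

Built-in datasets plug into a DataLoader the same way. For example, torchvision.datasets.MNIST(root, train=True, download=True, transform=transforms.ToTensor()) yields (image, label) pairs.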

Module 7: Computer Vision with PyTorch

Introduction to torchvision.models

CNN layers: Conv2d, MaxPool2d, Dropout

Building CNNs from scratch

Transfer learning with ResNet, VGG

Image classification pipeline (CIFAR-10, MNIST)
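Here is a minimal CNN built from the layers listed above, sized for 1x28x28 grayscale inputs such as MNIST. SmallCNN and its channel counts are hypothetical choices for illustration.

```python
import torch
from torch import nn

class SmallCNN(nn.Module):
    """Hypothetical CNN for 1x28x28 inputs."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),   # -> 16 x 28 x 28
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 16 x 14 x 14
            nn.Conv2d(16, 32, kernel_size=3, padding=1),  # -> 32 x 14 x 14
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 32 x 7 x 7
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(0.25),
            nn.Linear(32 * 7 * 7, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))

out = SmallCNN()(torch.randn(8, 1, 28, 28))  # a batch of 8 random "images"
```

For transfer learning, torchvision.models.resnet18(weights=ResNet18_Weights.DEFAULT) loads ImageNet-pretrained weights. You can then replace its fc attribute with nn.Linear(model.fc.in_features, 10) to adapt it to a 10-class dataset such as CIFAR-10.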

Module 8: NLP with PyTorch

Tokenization and word embeddings

Using torchtext and pre-trained embeddings (GloVe)

Text classification using RNNs/LSTMs

Building simple Seq2Seq models

Sentiment analysis project
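The sketch below shows tokenization, embeddings, and an LSTM text classifier. It assumes a hypothetical toy corpus and a plain whitespace tokenizer instead of torchtext utilities, so that it stays self-contained.

```python
import torch
from torch import nn

# Hypothetical toy corpus; whitespace tokenization builds the vocabulary
texts = ["great movie", "terrible plot", "great acting great story"]
vocab = {"<pad>": 0, "<unk>": 1}
for text in texts:
    for tok in text.lower().split():
        vocab.setdefault(tok, len(vocab))

def encode(text: str, max_len: int = 5) -> list[int]:
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in text.lower().split()][:max_len]
    return ids + [vocab["<pad>"]] * (max_len - len(ids))  # right-pad to a fixed length

class LSTMClassifier(nn.Module):
    def __init__(self, vocab_size: int, embed_dim: int = 16, hidden: int = 32, num_classes: int = 2):
        super().__init__()
        # padding_idx keeps the <pad> embedding at zero and excludes it from updates
        self.embed = nn.Embedding(vocab_size, embed_dim, padding_idx=0)
        self.lstm = nn.LSTM(embed_dim, hidden, batch_first=True)
        self.fc = nn.Linear(hidden, num_classes)

    def forward(self, ids: torch.Tensor) -> torch.Tensor:
        emb = self.embed(ids)           # (batch, seq_len, embed_dim)
        _, (h_n, _) = self.lstm(emb)    # h_n: (num_layers, batch, hidden)
        return self.fc(h_n[-1])         # classify from the last layer's final hidden state

batch = torch.tensor([encode(t) for t in texts])
logits = LSTMClassifier(len(vocab))(batch)
```

Pre-trained vectors such as GloVe can replace the random embedding through nn.Embedding.from_pretrained(weights, freeze=True). For variable-length inputs, nn.utils.rnn.pack_padded_sequence keeps the LSTM from processing pad positions.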

Module 9: Customizing and Debugging Models

Writing custom layers and loss functions

Registering parameters

Using hooks and model introspection

Model visualization with TensorBoard and torchviz
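This minimal sketch covers a custom layer with a registered parameter and buffer, a custom loss, and forward hooks for introspection. ScaledLinear and smooth_l1 are hypothetical names. The loss follows the same formula as PyTorch's built-in smooth L1 loss.

```python
import torch
from torch import nn

class ScaledLinear(nn.Module):
    """Hypothetical custom layer: a linear map with a learnable output scale."""

    def __init__(self, in_features: int, out_features: int):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        # Wrapping a tensor in nn.Parameter registers it, so it appears in .parameters()
        self.scale = nn.Parameter(torch.ones(1))
        # A buffer is saved in the state_dict but is not updated by the optimizer
        self.register_buffer("calls", torch.zeros((), dtype=torch.long))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        self.calls += 1
        return self.scale * self.linear(x)

def smooth_l1(pred: torch.Tensor, target: torch.Tensor, beta: float = 1.0) -> torch.Tensor:
    """Custom loss built from tensor ops, so autograd differentiates it automatically."""
    diff = (pred - target).abs()
    return torch.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta).mean()

model = nn.Sequential(ScaledLinear(3, 4), nn.ReLU(), nn.Linear(4, 1))

# Forward hooks record each child module's output shape
captured = {}

def save_shape(name: str):
    def hook(module, inputs, output):
        captured[name] = tuple(output.shape)
    return hook

for name, module in model.named_children():
    module.register_forward_hook(save_shape(name))

x, target = torch.randn(2, 3), torch.zeros(2, 1)
loss = smooth_l1(model(x), target)
loss.backward()
```

For visualization, torch.utils.tensorboard.SummaryWriter can log scalars and the model graph, for example with add_scalar and add_graph.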

Module 10: Advanced Deep Learning

Building RNN, GRU, and LSTM networks

Attention mechanism basics

Transformers with torch.nn.Transformer

Training large models with gradient clipping

Layer normalization, batch normalization
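The sketch below walks through the advanced topics above: scaled dot-product attention written out by hand, a small Transformer encoder, gradient clipping, and the two normalization layers. All sizes are arbitrary, and the squared-output "loss" is a placeholder chosen only to produce gradients.

```python
import math

import torch
from torch import nn

def attention(q: torch.Tensor, k: torch.Tensor, v: torch.Tensor):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))
    weights = torch.softmax(scores, dim=-1)  # each query's weights over the keys sum to 1
    return weights @ v, weights

q = k = v = torch.randn(2, 5, 8)  # (batch, seq_len, d_model), self-attention
out, weights = attention(q, k, v)

# nn.TransformerEncoderLayer bundles multi-head self-attention, a feed-forward block,
# residual connections, dropout, and LayerNorm
layer = nn.TransformerEncoderLayer(d_model=8, nhead=2, dim_feedforward=32, batch_first=True)
encoder = nn.TransformerEncoder(layer, num_layers=2)
encoded = encoder(torch.randn(2, 5, 8))

# Gradient clipping: rescale all gradients so their combined L2 norm is at most max_norm
encoded.pow(2).mean().backward()  # placeholder loss, just to populate gradients
total_norm = nn.utils.clip_grad_norm_(encoder.parameters(), max_norm=1.0)  # norm before clipping

# LayerNorm normalizes each sample over its features;
# BatchNorm1d (in training mode) normalizes each feature over the batch
ln = nn.LayerNorm(8)(torch.randn(4, 8))
bn = nn.BatchNorm1d(8)(torch.randn(4, 8))
```

In a real training loop, clip_grad_norm_ is called between loss.backward() and optimizer.step().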

Module 11: Deployment and Inference

Model serialization (torch.save, torch.load)

Exporting with TorchScript and ONNX

Deploying models to mobile and edge devices

Building APIs with Flask/FastAPI for inference
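Here is a minimal sketch of TorchScript export. The model is traced with an example input, saved, reloaded without its Python class, and checked against the original. The model itself is a hypothetical stand-in.

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2)).eval()
example = torch.randn(1, 4)

# Tracing records the operations run on the example input as a TorchScript program
scripted = torch.jit.trace(model, example)
path = os.path.join(tempfile.mkdtemp(), "model_ts.pt")
scripted.save(path)

# The saved file loads without the original Python class definition
loaded = torch.jit.load(path)

# Inference: eval mode and no gradient tracking
with torch.no_grad():
    batch = torch.randn(3, 4)
    expected = model(batch)
    actual = loaded(batch)
```

ONNX export follows a similar pattern with torch.onnx.export(model, (example,), "model.onnx"). Depending on the PyTorch version, it may require the onnx or onnxscript packages. A Flask or FastAPI endpoint would typically load the model once at startup and run each request inside torch.no_grad().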

Module 12: GPU Acceleration and Performance Tuning

Using .to(device) for GPU training

Multi-GPU training with DataParallel and DDP

Memory optimization tips

Mixed precision training with torch.cuda.amp
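This is a minimal, device-agnostic sketch of one mixed-precision training step. It falls back to the CPU, using bfloat16 autocast with the gradient scaler disabled, when no GPU is present. It uses torch.cuda.amp.GradScaler as named above; recent releases prefer the equivalent torch.amp.GradScaler("cuda").

```python
import torch
from torch import nn

# Use the GPU when one is available, otherwise the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(16, 4).to(device)         # moves the parameters to the device
x = torch.randn(32, 16, device=device)      # create data directly on the device
target = torch.randn(32, 4, device=device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# autocast runs eligible ops in a lower-precision dtype (float16 on CUDA, bfloat16 on CPU here);
# GradScaler scales the loss so float16 gradients do not underflow (disabled on CPU)
use_cuda = device.type == "cuda"
amp_dtype = torch.float16 if use_cuda else torch.bfloat16
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

with torch.autocast(device_type=device.type, dtype=amp_dtype):
    out = model(x)
    loss = nn.functional.mse_loss(out.float(), target)  # compute the loss in float32

optimizer.zero_grad(set_to_none=True)  # set_to_none frees gradient memory between steps
scaler.scale(loss).backward()
scaler.step(optimizer)
scaler.update()
```

For multiple GPUs, PyTorch recommends torch.nn.parallel.DistributedDataParallel over DataParallel. DDP runs one process per GPU and is usually launched with torchrun.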

Module 13: Reinforcement Learning

Basic concepts: agents, rewards, environment

Building an RL agent with PyTorch

Using OpenAI Gym

Policy gradients (REINFORCE)
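Below is a minimal REINFORCE sketch. So that it runs without Gym installed, a hypothetical one-step, two-armed bandit stands in for a Gym environment. The arm payout probabilities, batch size, and learning rate are illustrative assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

def step(action: int) -> float:
    """Hypothetical environment: arm 1 pays 1.0 with prob 0.8, arm 0 with prob 0.2."""
    p = 0.8 if action == 1 else 0.2
    return 1.0 if torch.rand(()).item() < p else 0.0

# Policy: learnable logits over the two actions (a bandit has no observation)
logits = nn.Parameter(torch.zeros(2))
optimizer = torch.optim.Adam([logits], lr=0.1)

for update in range(300):
    dist = torch.distributions.Categorical(logits=logits)
    actions = dist.sample((16,))                       # 16 one-step episodes per update
    rewards = torch.tensor([step(a.item()) for a in actions])
    advantage = rewards - rewards.mean()               # mean baseline reduces variance
    # REINFORCE: maximize E[log pi(a) * return], i.e. minimize its negative
    loss = -(dist.log_prob(actions) * advantage).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

prob_best_arm = torch.softmax(logits, dim=0)[1].item()
```

In a multi-step Gym-style environment, the policy would take the observation as input. Each action's log-probability would then be weighted by the discounted return from that time step onward.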

Module 14: PyTorch Ecosystem Overview

TorchVision for vision tasks

TorchText for NLP

TorchAudio for speech

PyTorch Lightning (optional) for research workflows

Module 15: Capstone Projects

Image classifier (CIFAR-10 or custom dataset)

Text classification using LSTM

Transfer learning for object detection

Deploy a PyTorch model via Flask API

Visualize filters and activations in CNNs

Module 16: Projects & Case Studies

Sentiment analysis on real-world reviews

Resume/job description matching engine

Customer support chatbot using RAG

Text summarizer using BERT

Multilingual Q&A system