GEN AI

in Data Science and AI

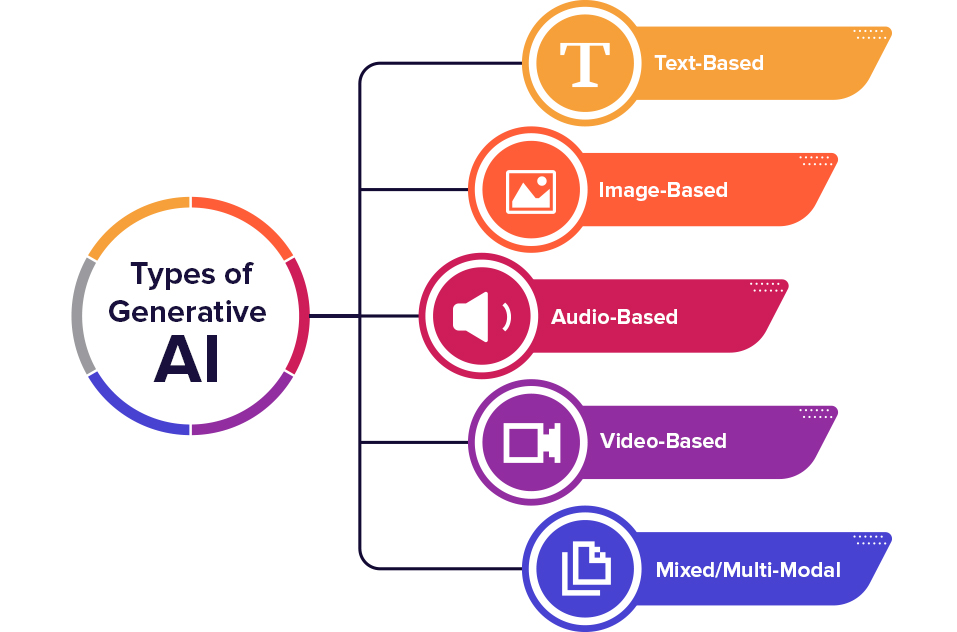

Generative AI creates new content—text, images, audio, video, and code—revolutionizing how humans and machines interact.

It powers large language models (LLMs) such as GPT, Claude, and LLaMA, which are used in AI assistants, chatbots, and productivity tools.

GenAI enables rapid prototyping, automation, and personalization, reducing manual effort in creative and cognitive tasks.

It enhances traditional AI pipelines by enabling data augmentation, simulation, and synthetic data generation.

In industries like healthcare and finance, GenAI helps draft medical records and reports and summarize complex data.

It supports natural language interfaces for querying databases, coding, and automating workflows—key for next-gen AI apps.

GenAI models exhibit zero-shot and few-shot learning, making them versatile across a wide range of tasks without retraining.

It is a cornerstone for the rise of multimodal AI that combines text, image, speech, and video understanding.

GenAI unlocks creative AI—from AI-generated art to music, writing, and game design—blending tech and creativity.

Understanding GenAI is essential to build, fine-tune, and safely deploy modern AI systems in real-world products and services.

Module 1: Introduction to Generative AI

What is Generative AI?

Generative AI vs Discriminative AI

Historical evolution: from GANs to LLMs

Applications across industries (text, image, video, music)

Challenges and ethical considerations

Module 2: Foundations of Generative Models

Generative vs predictive modeling

Probability and likelihood basics

Maximum likelihood estimation (MLE)

Bayesian inference (brief overview)

Data distribution modeling
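
To make the maximum likelihood estimation topic in this module concrete, here is a minimal sketch that fits a Bernoulli (coin-flip) parameter. The function names and the sample data are hypothetical and chosen only for illustration; they are not taken from the course materials.

```python
import math

def bernoulli_log_likelihood(p, flips):
    """Log-likelihood of a list of 0/1 outcomes under heads-probability p."""
    return sum(math.log(p) if x == 1 else math.log(1 - p) for x in flips)

def bernoulli_mle(flips):
    """Closed-form MLE for a Bernoulli parameter: the sample mean."""
    return sum(flips) / len(flips)

# Hypothetical data: 5 heads out of 8 flips.
flips = [1, 0, 1, 1, 0, 1, 1, 0]
p_hat = bernoulli_mle(flips)
print(p_hat)  # 0.625

# The estimate should score at least as well as any other candidate value.
for candidate in (0.3, 0.5, 0.7):
    assert bernoulli_log_likelihood(p_hat, flips) >= bernoulli_log_likelihood(candidate, flips)
```

The closed-form answer is used here because the Bernoulli case has one; for most deep generative models the likelihood is maximized numerically with gradient-based optimization instead.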

Module 3: Traditional Generative Models

Gaussian Mixture Models (GMM)

Hidden Markov Models (HMM)

Naive Bayes as a generative classifier

Module 4: Deep Generative Models

Introduction to neural network-based generation

Autoencoders:

Architecture and use cases

Denoising Autoencoders

Variational Autoencoders (VAEs)

Generative Adversarial Networks (GANs):

Generator vs Discriminator

Vanilla GAN, DCGAN, Conditional GAN

CycleGAN, StyleGAN (overview)

Module 5: Language Modeling & NLP Foundations

What is a language model?

N-gram models and limitations

Introduction to embeddings:

Word2Vec, GloVe, FastText

Sequence modeling:

RNNs, LSTM, GRU
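
As a small illustration of the N-gram topic in this module, the following sketch builds a bigram model from counts and shows its main limitation: any word pair not seen in training gets probability zero. The helper names and the toy sentence are assumptions made for this example.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def bigram_prob(counts, prev, nxt):
    """Unsmoothed estimate of P(nxt | prev) from the counts."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

# Toy corpus, invented for this example.
tokens = "the cat sat on the mat".split()
counts = train_bigram(tokens)

print(bigram_prob(counts, "the", "cat"))  # 0.5
print(bigram_prob(counts, "the", "dog"))  # 0.0 (unseen pair)
```

Smoothing techniques and, later, neural sequence models address the zero-probability problem shown in the last line.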

Module 6: Transformer Architecture

The Attention mechanism

Scaled Dot-Product Attention

Multi-Head Attention

Encoder-Decoder structure

Positional encoding

Why Transformers replaced RNNs
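
The scaled dot-product attention listed in this module can be sketched in a few lines of plain Python. This is a teaching sketch under simplifying assumptions (single head, no masking, no batching, lists instead of tensors); the function names are illustrative, and real implementations use a tensor library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors.

    Q: query vectors, K: key vectors (same dimension d_k as the queries),
    V: value vectors, one per key.
    """
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V))
                       for j in range(len(V[0]))])
    return output

# A zero query attends equally to both keys, so the output is the mean of V.
result = scaled_dot_product_attention([[0.0, 0.0]],
                                      [[1.0, 0.0], [0.0, 1.0]],
                                      [[1.0, 2.0], [3.0, 4.0]])
print(result)  # [[2.0, 3.0]]
```

Multi-head attention runs several such computations in parallel on learned projections of the inputs and concatenates the results.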

Module 7: Large Language Models (LLMs)

Understanding GPT architecture

GPT-2 vs GPT-3 vs GPT-4

Tokenization and vocabulary

Pre-training vs fine-tuning

Zero-shot, one-shot, few-shot learning

Popular LLMs: OpenAI GPT, LLaMA, Mistral, Claude, Gemini

Module 8: Prompt Engineering

What is a prompt?

Prompt design principles

Chain-of-thought prompting

Role prompting and system messages

Prompt tuning vs fine-tuning

Tools: LangChain, LlamaIndex, Flowise (intro)

Module 9: Fine-Tuning and Adaptation

Parameter-efficient tuning methods:

LoRA, QLoRA, and the Hugging Face PEFT library

Supervised Fine-Tuning (SFT)

Reinforcement Learning from Human Feedback (RLHF)

Transfer learning in GenAI

Module 10: Evaluation of Generative Models

Evaluation metrics for text:

BLEU, ROUGE, METEOR, perplexity

Evaluation metrics for images:

FID, IS (Inception Score)

Human evaluation

Alignment and hallucination detection
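
Of the text metrics listed in this module, perplexity is the simplest to compute by hand: it is the exponential of the average negative log-probability the model assigns to each token. The sketch below assumes the per-token probabilities are already available; the function name and the numbers are made up for illustration.

```python
import math

def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each observed token."""
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# A model that assigns probability 0.25 to every token is as uncertain as
# a uniform choice among 4 options, so its perplexity is about 4.
uniform = perplexity([0.25, 0.25, 0.25, 0.25])
print(round(uniform, 6))

# Hypothetical per-token probabilities from a more confident model.
confident = perplexity([0.9, 0.8, 0.95, 0.7])
assert confident < uniform  # lower perplexity means a better fit to the text
```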

Module 11: Tools and Frameworks

OpenAI API (GPT-4, Assistants API)

Hugging Face Transformers & Datasets

LangChain, Vector databases (FAISS, Chroma)

Gradio, Streamlit for GenAI apps

Google Colab / Kaggle Notebooks

Module 12: Multimodal Generative AI

What is multimodal learning?

Image generation: DALL·E, Midjourney, Stable Diffusion

Speech-to-text and text-to-speech: Whisper, ElevenLabs

Vision-language models (CLIP, Flamingo, Gemini)

Video generation (intro only)

Module 13: Responsible and Ethical GenAI

Hallucination and misinformation risks

Bias and fairness in generation

Safety and content filtering

Copyright, plagiarism, deepfakes

Governance and regulations (EU AI Act, OpenAI guidelines)

Module 14: GenAI Applications & Projects

AI-powered chatbots and copilots

Summarization and content rewriting

Text-to-image generation app

Code generation and debugging

Resume, email, or blog writing assistants

Project: Build a mini AI assistant using OpenAI API + LangChain